Driving confidence in AI: Foundational distributional testing explained through a RAG example

In this article, I’d like to share some examples to help readers gain intuition around what it means to statistically test a RAG app, using the example of a knowledge base on every engineer’s favorite topic, cats. The goal is to help develop some intuition around the type of data collected when running a GenAI app, and how this can be helpful when understanding the behavior of the app. In this example, we are looking at a situation with changing user behavior, but we can easily imagine a scenario where it’s one of the components or the RAG itself that is causing problems.

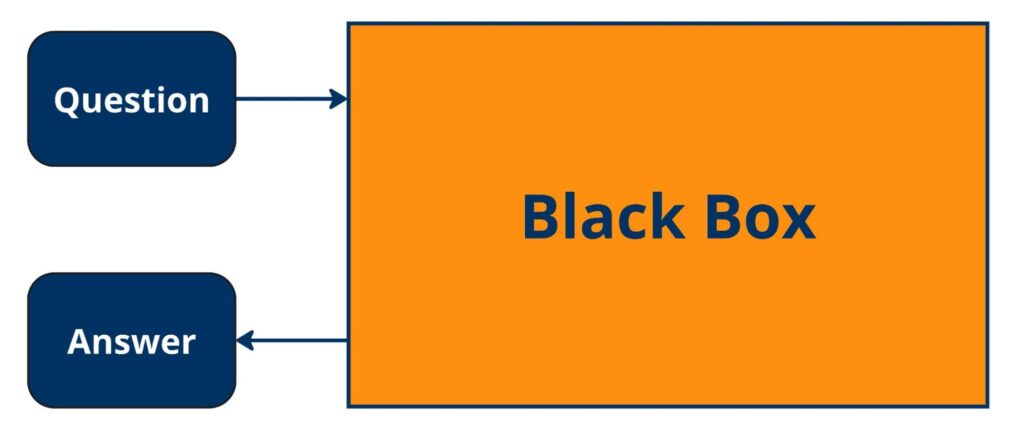

Looking at a RAG app as black box

Let’s start with how users see a RAG app. On the surface, a RAG app is just a black box—a user asks a question, and the app gives back an answer.

When we ask a question: “Why do cats meow?”

We get an answer back: “Cats typically meow primarily as a form of communication or attention-seeking, rather than due to hunger or need for food. Meowing can indicate pleasure, excitement, or affection, often when a human is nearby or trying to interact with the cat.”

[{'Question': 'Why do cats meow?',

'Answer': 'Cats typically meow primarily as a form of communication or

attention-seeking, rather than due to hunger or need for food.

Meowing can indicate pleasure, excitement, or affection, often

when a human is nearby or trying to interact with the cat.'}]Whether or not we were actually able to answer the question correctly is not the topic of this post. For now, we are interested in knowing whether the question is being answered using information for our knowledge base, or if our app is simply just using the large language model to answer the question.

Looking at the logs for black box app

Now, the problem is that we have no idea what’s happening within this black box. On its own, the question-answer pair doesn’t tell us if the answer comes from knowledge that is embedded within the large language model, or if it comes from the knowledge base connected to our application. Is the answer a hallucination—or something we can trust?

Just looking at the question-answer pair doesn’t give us enough information. If we want to understand how our RAG app got the answer, we’ll need to look under the hood.

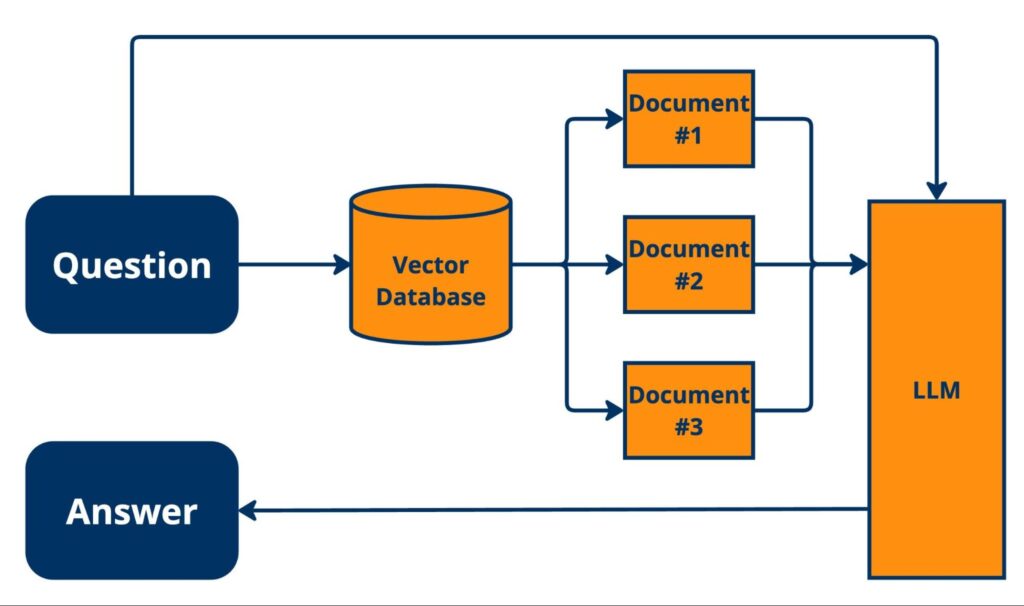

Splitting your RAG app into components

To start, we need to break down our RAG app into components. Let’s assume we have a basic vanilla RAG application that relies on a single information database.

Starting with a question, we look for similar contexts in our vector database and identify a number of documents which hopefully contain the answer to that question. We then pair those documents with the actual question and give it to our LLM, which generates an answer. For this example we’ll identify three documents every time we generate and answer.

All of this is controlled by our instruction prompt, where we tell our application how to behave and what to do.

instruction_prompt_context = """

You are a helpful chatbot designed to provide answers based on the

retrieved documents.

Follow these guidelines:

1. You can only provide responses less than 30 words.

2. Focus on providing insights directly from the retrieved

documents and avoid speculative or unsupported answers.

3. Maintain a professional tone suitable for executives and

avoid technical jargon unless explicitly requested.

Always ensure the output is accurate, relevant, and adheres to these

instructions.

These are the retrieved documents:

"""One of the things that we are telling our application is:

2. Focus on providing insights directly from the retrieved

documents and avoid speculative or unsupported answers.How to test whether or not our application is actually following this guideline is the focus for this blogpost. How to test for instruction 1. is pretty self explanatory (simply check how many words are in the response), and how to test for 3. is something that we will cover in a later blogpost.

Looking at the logs for component app

Now, when we look at the log for a single app usage, we still have the question-answer pair but now we also have a list of all the documents that have been retrieved, along with similarity scores for each of them. For this particular example, the similarity scores are computed using the same embedding model as for the knowledge base and the cosign similarity score.

[{'Question': 'Why do cats meow?',

'Answer': 'Cats typically meow primarily as a form of communication or

attention-seeking, rather than due to hunger or need for food.

Meowing can indicate pleasure, excitement, or affection, often

when a human is nearby or trying to interact with the cat.'

'Doc_1_text: 'A cat almost never meows at another cat, mostly just humans.

Cats typically will spit, purr, and hiss at other cats',

'Doc_1_QD_sim: '0.72',

'Doc_1_DA_sim: '0.73',

'Doc_2_text: ...}]These similarity scores measure the similarities between the question and different pieces of data within the vector database [QD_sim]. This tells us whether or not the information exists within our database.

Another thing we can compute is the similarity between the document that I’ve retrieved and the answer that was generated [DA_sim]. This tells us whether or not the LLM is actually using the information provided to generate an answer. Together, these give us more insight into where the information used to create the answer is coming from.

Creating a baseline

To better understand our RAG app’s behavior through a statistical lens, we need to create a baseline. A baseline is a number of logs that answer questions in the way that we want them to. How we are coming up with our baseline is not something that we are going to cover in this blogpost.

We can create a baseline using real data from our customers, or it can be from a set of questions we generated ourselves—having a sense of what questions should look like and the kind of behavior we want to see.

[{'Question': 'Why do cats meow?',

'Answer': 'Cats typically meow primarily as a form of communication or

attention-seeking, rather than due to hunger or need for food.

Meowing can indicate pleasure, excitement, or affection, often

when a human is nearby or trying to interact with the cat.'

'Doc_1_text: 'A cat almost never meows at another cat, mostly just humans.

Cats typically will spit, purr, and hiss at other cats',

'Doc_1_QD_sim: '0.72',

'Doc_1_DA_sim: '0.73',

'Doc_2_text: ...},

{'Question': 'Can cats get sunburned?',

'Answer': 'Yes, cats can indeed get sunburned. Frequent exposure to the sun,

especially on their white fur and exposed skin areas like ears,

can cause skin damage leading to sunburn.'

'Doc_1_text: 'Cats with white fur and skin on their ears are very prone to

sunburn. Frequent sunburns can lead to skin cancer. Many white

cats need surgery to remove all or part of a cancerous ear.

Preventive measures include sunscreen, or better, keeping the

cat indoors.',

'Doc_1_QD_sim: '0.82',

'Doc_1_DA_sim: '0.88',

'Doc_2_text: ...},]Then we take that baseline and create distributions from the baseline. What we see in the histograms below is what we call a “run” in Distributional, which are the similarity scores for our baseline over a set period of time, such as an hour or a day.

Looking at a single result

Let’s take a look at a single question-answer pair within these distributions, going back to our original question “Why do cats meow?”

[{'Question': 'Why do cats meow?',

'Answer': 'Cats typically meow primarily as a form of communication or

attention-seeking, rather than due to hunger or need for food.

Meowing can indicate pleasure, excitement, or affection, often

when a human is nearby or trying to interact with the cat.'}]The answer seems right to me, but let’s look at the text retrieved from the documents ranked highest for similarity.

These documents look good to me, but let’s introduce an experiment to test this and gain more intuition on what things look like when they go off the rails.

Introducing an experiment

To get an understanding of how it looks, when things are not going as planned, we can introduce a simple experiment, and look at how this differs from our baseline. To do that, we are asking our app a whole bunch of questions about other animals than cats. Similarly, to the baseline we then log the similarity scores and plot those in a histogram (red) together with our baseline (blue).

Here we see that pretty much all of the similarity scores are significantly lower for the animal-questions than the cat-questions, meaning that it is less likely that our RAG-app has found the correct information in our knowledge base to answer the question.

Looking at single result

Let’s take a look at a single log to better understand what’s happening. If we ask a question about fish, the answer seems accurate.

[{'Question': 'Question: What role do cleaner fish play in maintaining the

health of other fish?',

'Answer': 'Answer: Cleaner fish help maintain the health of other fish by

removing parasites and organic waste from their own bodies. This

process is known as biofertilization or biological control.'}]But when we look at our distributions and the text from our similar documents, it’s clear that this answer is not coming from within our database. All of the text referenced is about cats, not fish, and the question-answer pair does not align with our baseline distributions either.

So now, instead of having a human go though all of our production logs and categorize whether or not the LLM is hallucinating, we can use our simple to compute similarity scores the get a good understanding of whether or not that is the case, and significantly reduce the need for humans in the loop.

Introducing production data

Let’s continue to explore how our app responds when we introduce real production data. Although we hope that our users will only ask cat questions, they may start asking questions about other topics. For example, octopuses.

[{'Question': 'Question: How do octopuses camouflage themselves in their

environment?',

'Answer': 'Answer: Octopuses use color-changing cells called

chromatophores to camouflage themselves, making it difficult for

predators to detect them. They also use pattern recognition and

texture matching to blend in with their surroundings.'}]At a quick glance, we can quickly see which question-answer pairs are cause for concern. For the question “How do octopuses camouflage themselves in their environment?” we can see that the documents being retrieved have nothing to do with octopuses. Instead, this answer is being generated with knowledge that the LLM has.

Even though I’ve specified that my app can only use information I’ve provided to generate an answer, it’s clearly going off the rails and generating what presumably is the correct answer using information embedded in the model. While this is likely not too big of a problem for an app focused on cats, it could be very bad for organizations focused on finance, HR, law, or healthcare. Here the stakes are much higher and we don’t want our RAG app to hallucinate answers. Therefore, it is important for companies to have an automated way, similar to what we just walked through, to detect these things at scale.

To make the above insights actionable, we need to start thinking about why the change is occurring. In this particular example, it is pretty clear why things are starting to look different – our users are asking questions from an unintended domain – which can potentially be solved by changing the way our users interact with the app. However, not all changes are a function of our users, a lot of changes come from drift in models and continuous changes to the app. Here we need to start thinking about whether the problem comes from our instruction prompt, our vector database, retrieval algorithms or something completely different. This is what we do at Distributional, helping you understand where and when these changes are happening, and from there give you the tools you need to address the problems.

Automating testing for RAG apps

Tests like these are just a few of many that teams should run to continuously test their RAG apps.

With Distributional, modeling teams can set up and automate statistical tests to help them understand how the performance of their GenAI applications has shifted over time and get alerts when things go off the rails. Our goal is to help teams develop confidence in the AI applications they’re taking to production, so they can rely on more than just a gut check to ensure their applications are working as intended.

Interested in exploring how Distributional can help your organization? Sign up for access to our product or get in touch with our team to learn more.