The AI Software Development Lifecycle: A practical framework for modern AI systems

I recently found myself in a discussion with a group of ML engineers who were debating whether traditional ML development practices could transfer to GenAI applications. The conversation highlighted a crucial point: while there’s significant overlap between traditional ML and GenAI development lifecycles, the unique characteristics of GenAI systems demand a fresh perspective on how we build, deploy, and maintain these applications.

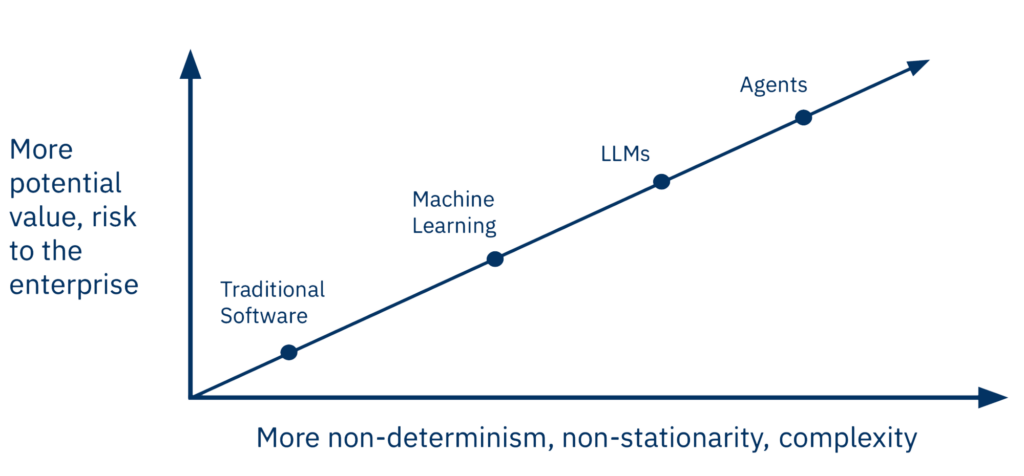

The software development lifecycle (SDLC) is a structured approach to software development that guides development teams through the stages of designing, building, and deploying high-quality software. Its primary goal is to reduce project risks through proactive planning, ensuring that the software meets expectations both during production and throughout its lifecycle. Traditional software development follows a predictable, linear path with clear requirements and deterministic outcomes. Machine learning development shifts the focus to data collection and pre-processing, model training and validation with clear performance metrics, and model versioning. GenAI development introduces a new paradigm centered on prompt engineering and model fine-tuning, managing non-deterministic outputs, evaluating contextual understanding and response quality, and balancing cost with performance.

While traditional software and ML applications rely on concrete metrics and testing, GenAI requires more flexible evaluation methods and continuous adaptation to model changes. The key distinction lies in how risk increases and certainty decreases as we move from traditional software (most certain) to ML (less certain) to GenAI (least certain), requiring increasingly adaptive development approaches.

With over two decades of experience helping to build and drive the evolution from traditional software development to machine learning and now to generative AI, I have seen how important it is to establish a clear framework for the AI Software Development Lifecycle (AI SDLC). The version I present below is simplified, but it captures the essential elements that teams need to consider when developing AI systems. I walk through each phase of the lifecycle, including common tooling that many teams use today.

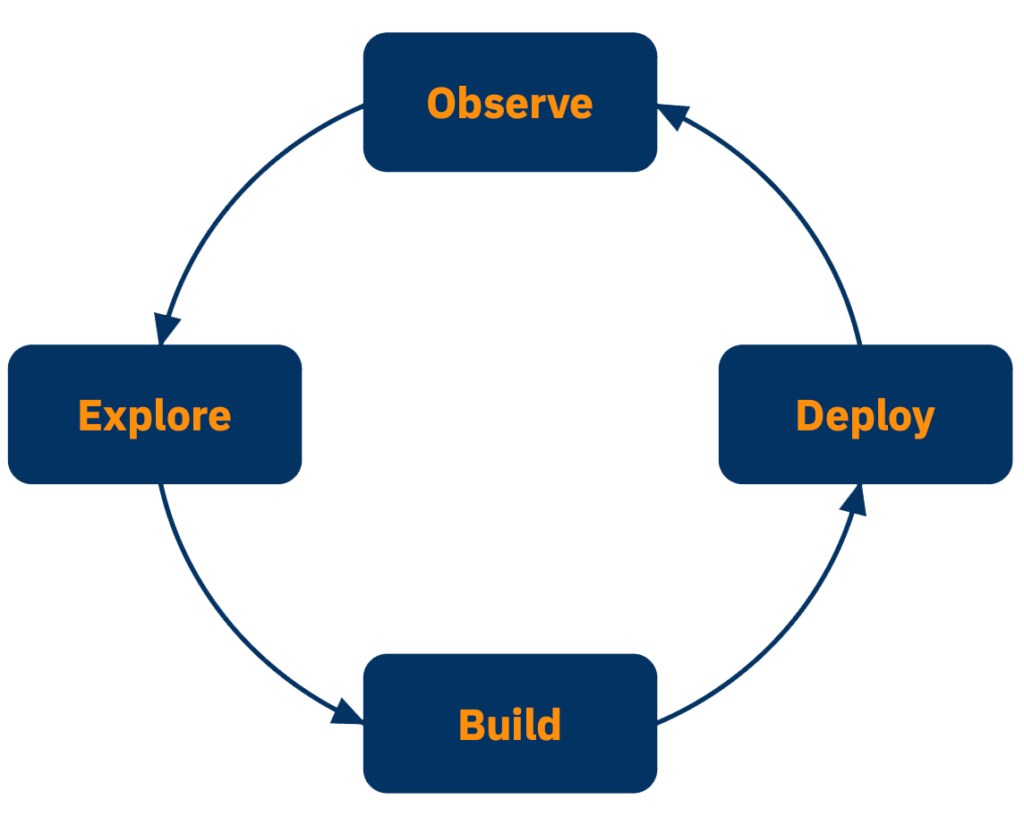

The AI SDLC: A simplified framework

There are four key phases in the simplified view of the AI SDLC: Explore, Build, Deploy, and Observe. Though these phases might resemble the traditional SDLC, the tooling in AI is more complex, and the AI SDLC differs much more significantly at the Deploy and Observe stages due to the non-deterministic nature of GenAI applications.

Explore

The exploration phase involves defining the problem scope, understanding available data, and selecting appropriate model architectures. While this stage remains relatively consistent between ML and GenAI projects, GenAI applications might fast-track this phase, moving directly to building with pre-trained foundation models and prompt engineering. Teams typically leverage basic data analysis tools, IDEs, and visualization frameworks, with GenAI projects potentially incorporating prompt playgrounds for initial experimentation. If you don’t spend enough time understanding and defining the problem, you risk solving the wrong problem. The goal of the Explore phase is to gather enough information to set yourself up for success in the subsequent phases.

Build

This stage focuses on rapid prototyping and offline evaluation to validate the feasibility of the solution. The Build phase marks a significant divergence between ML and GenAI approaches. Traditional ML emphasizes model training with well-defined performance metrics, while GenAI development centers on iterative prompt engineering and semantic analysis of responses, often with less concrete success metrics.

The development tooling landscape has exploded, with numerous startups offering everything from “prompt playgrounds” and debugging to log viewers and dataset annotation. While some frameworks provide extensive functionality and a polished interface, teams can succeed with a minimal toolset focused on prompt management and basic testing. This phase is intentionally fluid and experimental, but testing during the Build phase typically serves one of three purposes: proactively addressing anticipated deployment constraints, recreating production issues in a development environment for debugging, or driving improved performance in responses. Outside of these scenarios, most systematic testing is better suited for the Deploy phase. The Build phase may appear less structured than traditional software development, but its dynamic and exploratory nature is purposeful: it enables teams to rapidly test hypotheses and discover optimal solutions.

Because of this open-ended nature, it is common for AI applications to get stuck at the Build phase, particularly during the first pass through the lifecycle. Explicitly defining what success looks like before you begin building can give you the confidence you need to move on to the next phase.
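One way to make "what success looks like" explicit is to write it down as a small offline evaluation with a pass-rate threshold. The sketch below is only an illustration: `EvalCase`, `pass_rate`, `ready_to_leave_build`, the canned `fake_generate` stand-in for a model call, and the 0.9 threshold are all hypothetical choices, not part of any particular tool.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class EvalCase:
    prompt: str
    check: Callable[[str], bool]  # returns True when the response is acceptable


def pass_rate(generate: Callable[[str], str], cases: list[EvalCase]) -> float:
    """Fraction of cases whose response satisfies its check."""
    if not cases:
        raise ValueError("need at least one evaluation case")
    passed = sum(1 for case in cases if case.check(generate(case.prompt)))
    return passed / len(cases)


def ready_to_leave_build(rate: float, threshold: float = 0.9) -> bool:
    """Exit criterion agreed on before building; 0.9 is an arbitrary example."""
    return rate >= threshold


def fake_generate(prompt: str) -> str:
    """Stand-in for a real model call, so the sketch runs offline."""
    canned = {
        "Refund policy?": "Refunds are available within 30 days.",
        "Support hours?": "We are open 9am to 5pm on weekdays.",
        "Cancel my order": "I am not sure.",
    }
    return canned.get(prompt, "")


CASES = [
    EvalCase("Refund policy?", lambda r: "30 days" in r),
    EvalCase("Support hours?", lambda r: "9am" in r),
    EvalCase("Cancel my order", lambda r: "cancel" in r.lower()),
]

rate = pass_rate(fake_generate, CASES)
print(f"pass rate: {rate:.2f}, ready: {ready_to_leave_build(rate)}")
```

With these canned responses, two of the three checks pass, so the prototype would stay in the Build phase under the example threshold. Real checks are usually fuzzier than substring matches, but the point is that the exit criterion exists before iteration starts.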

Deploy

Pre-production deployment involves ensuring application scalability, generalization capability, and stability. While both ML and GenAI systems face similar challenges with multi-component deployments, GenAI applications introduce additional complexity through their inherent non-determinism and potential dependency on third-party LLMs. This necessitates more rigorous consistency checking and validation procedures. Currently, there’s a noticeable gap in specialized deployment tools for AI systems, making testing frameworks crucial for bridging development and production environments.
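A simple form of consistency checking is to send the same prompt many times and measure how often the responses agree. The following is a rough sketch under stated assumptions: `agreement_rate` and `make_flaky_model` are hypothetical names, the "model" is a deterministic stand-in that cycles through fixed answers, and exact match after normalization is only one of several possible notions of agreement.

```python
import itertools
from collections import Counter
from typing import Callable


def agreement_rate(generate: Callable[[str], str], prompt: str, runs: int = 20) -> float:
    """Share of runs that return the most common (normalized) response."""
    if runs < 1:
        raise ValueError("runs must be at least 1")
    responses = [generate(prompt).strip().lower() for _ in range(runs)]
    _, top_count = Counter(responses).most_common(1)[0]
    return top_count / runs


def make_flaky_model() -> Callable[[str], str]:
    """Stand-in for a non-deterministic LLM: every fifth answer differs."""
    answers = itertools.cycle(["Paris", "Paris", "paris ", "Paris", "Lyon"])
    return lambda prompt: next(answers)


score = agreement_rate(make_flaky_model(), "Capital of France?", runs=20)
print(f"agreement: {score:.2f}")
```

For free-form text, exact matching is usually too strict, and teams tend to substitute a similarity measure or a rubric-based check. The structure stays the same: repeat, compare, and track the rate.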

AI applications are typically multi-component systems, so it is important at the Deploy phase to carefully test how the components fit together in a production-simulated environment. Unexpected outputs from an LLM, for example, can easily cascade across the system and create instability within other components, potentially causing a system failure.
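To illustrate how a cascade can be contained, the sketch below validates an LLM response at the boundary between components before anything downstream consumes it. Everything here is assumed for the example: the JSON shape with `intent` and `confidence` fields, the `ALLOWED_INTENTS` set, the 0.5 confidence cutoff, and the queue names.

```python
import json
from typing import Optional

ALLOWED_INTENTS = {"refund", "shipping", "other"}  # hypothetical label set


def parse_intent(raw: str) -> Optional[dict]:
    """Return a validated payload, or None if the LLM output is unusable."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return None
    if not isinstance(data, dict):
        return None
    intent = data.get("intent")
    confidence = data.get("confidence")
    if not isinstance(intent, str) or intent not in ALLOWED_INTENTS:
        return None
    # bool is a subclass of int, so reject it explicitly
    if isinstance(confidence, bool) or not isinstance(confidence, (int, float)):
        return None
    if not 0.0 <= confidence <= 1.0:
        return None
    return {"intent": intent, "confidence": float(confidence)}


def route(raw: str) -> str:
    """Send only validated, confident results downstream; otherwise fall back."""
    payload = parse_intent(raw)
    if payload is None or payload["confidence"] < 0.5:
        return "human_review"
    return payload["intent"] + "_queue"


print(route('{"intent": "refund", "confidence": 0.92}'))
print(route("Sure! Here is the JSON you asked for..."))
print(route('{"intent": "delete_everything", "confidence": 0.99}'))
```

The design choice is that malformed or out-of-vocabulary output becomes an explicit fallback path rather than an exception somewhere deeper in the system.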

Observe

The observation phase encompasses monitoring and maintenance of deployed AI systems. While ML and GenAI share common monitoring needs, GenAI presents unique challenges:

- Optimization becomes critical due to reference model costs and a lack of control. For example, if there’s a shift in the distribution of your inputs that causes a large increase in the number of tokens returned by an LLM, you could incur unexpected expenses.

- Unstructured data complicates monitoring and testing. For example, a customer service chatbot needs to be able to recognize misspellings and unexpected input that might not be present in the training or offline testing data.

- Non-deterministic behavior requires statistical analysis of metric distributions in order to understand whether something meaningful has changed. For example, if the distribution shifted from unimodal to bimodal, you wouldn’t pick that up looking at averages.
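The point about averages can be made concrete with a small example. The sketch below compares two made-up samples of response token counts: a unimodal baseline and a bimodal current window with the same mean. It uses a hand-written two-sample Kolmogorov-Smirnov statistic (the largest gap between the two empirical CDFs) as one possible distribution-level measure. The data and the `ks_statistic` helper are illustrative assumptions, not a recommendation of a specific test.

```python
from bisect import bisect_right
from statistics import mean


def ks_statistic(a: list[float], b: list[float]) -> float:
    """Two-sample Kolmogorov-Smirnov statistic: max gap between empirical CDFs."""
    a_sorted, b_sorted = sorted(a), sorted(b)
    if not a_sorted or not b_sorted:
        raise ValueError("both samples must be non-empty")
    gap = 0.0
    # The empirical CDFs only change at observed values, so checking those suffices.
    for x in set(a_sorted) | set(b_sorted):
        cdf_a = bisect_right(a_sorted, x) / len(a_sorted)
        cdf_b = bisect_right(b_sorted, x) / len(b_sorted)
        gap = max(gap, abs(cdf_a - cdf_b))
    return gap


# Made-up token counts per response (illustrative data only).
baseline = [180, 190, 195, 200, 200, 205, 210, 220]  # one cluster near 200
current = [90, 95, 100, 115, 285, 300, 305, 310]     # two clusters, same mean

print("means:", mean(baseline), mean(current))
print("KS statistic:", ks_statistic(baseline, current))
```

Both samples average 200 tokens, so a dashboard of means would show no change, while the KS statistic of 0.5 indicates that the two distributions differ substantially. In practice the alerting threshold depends on sample size and on how much variation is normal for the application.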

The tooling ecosystem includes inference optimization, routing systems, monitoring platforms, and guardrail implementations. Testing frameworks incorporate and complement these tools by providing continuous validation of system behavior. Without observability, the AI SDLC would be a linear process ending at the deployment step. However, the insights gained at the Observe phase allow us to circle back around, adjusting any assumptions that turned out not to be correct, and improving the models at the Build stage to more closely meet desired expectations and goals.

Moving through the lifecycle

The AI SDLC differs from the traditional SDLC in that teams typically progress through these stages in cycles, rather than linearly. A GenAI project might start with rapid prototyping in the Build phase, circle back to Explore for data analysis, then iterate between Build and Deploy as the application matures. The Observe phase provides continuous feedback that often triggers new cycles through earlier stages.

The iterative nature of the AI SDLC provides a mechanism by which the application can be continuously improved based on insights gleaned in the Observe phase. Without continuous feedback from the Observe phase, there’s a substantial risk that the application does not behave as expected; for example, an LLM could produce nonsensical or incorrect responses.

Additionally, the performance of the application can degrade over time as the distribution of the input data shifts.

Looking ahead

The AI SDLC continues to evolve as we gain more experience with GenAI systems. As more complex AI applications (e.g. RAG, agents) are built, the lifecycle will evolve along with them. While this simplified framework provides a foundation for development practices, teams should adapt it to their specific needs and constraints.

About Distributional

Distributional is building the platform for AI testing, to help all AI teams gain confidence in the reliability of their applications, no matter how the SDLC evolves.