Driving confidence in AI: Understanding deployment testing through model version updates

Building on my previous blog post, I’d like to share more examples to help readers develop intuition around AI testing. This time, rather than focusing on RAG apps, we’ll dive into deployment testing. The goal is to illustrate how deployment testing can provide deeper insights into the consistency of an AI-powered application after it reaches production.

At its core, deployment testing is about understanding the consistency of an AI application over time. The scope can range from continuously testing a single LLM-endpoint to continuously testing an entire AI application.

Regardless of the scope, the goal is to extract a meaningful signal that indicates whether or not something is changing. Interestingly, testing the full AI application is often easier, because we generally have a clearer understanding of what the application is supposed to do. In contrast, testing a single LLM-endpoint can be more challenging, as its expected behavior is harder to define.

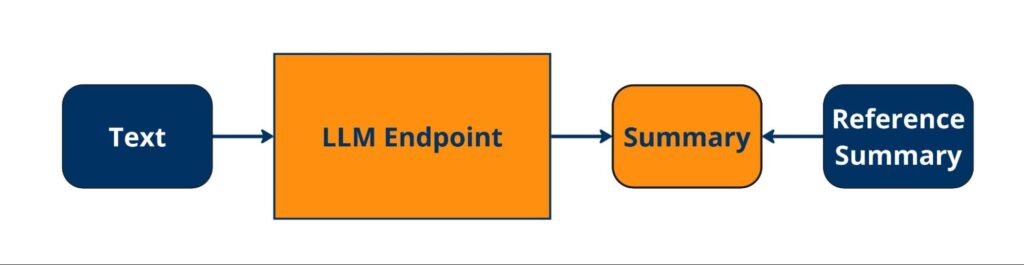

There are multiple ways to extract meaningful signals. I personally like to extract signals through summarization. The figure above shows a simple system diagram for a summarization application.

Since our focus is on testing a single LLM-endpoint, the goal isn’t to build a world-class summarization application. Instead, the aim is to create a solution that’s reliable enough to extract a meaningful signal of change.

So what is the signal?

The signal comes from the idea that by repeatedly exposing an LLM-endpoint to the same task at a regular cadence (i.e., deployment testing), we can continuously compute and compare a set of metrics over time. The change in these metrics, or the delta, is what I refer to as the signal.
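As a minimal sketch of that idea, assume each deployment-test run is reduced to one average score per metric and keyed by a sortable run label such as "2024-05" (a convention chosen here for illustration, not taken from the post). The signal is then the run-over-run difference, optionally filtered by a tolerance you would have to pick yourself:

```python
from typing import Dict, List, Tuple

Delta = Tuple[str, str, float]  # (previous run, current run, change in score)


def metric_deltas(history: Dict[str, float]) -> List[Delta]:
    """Run-over-run change of one metric.

    `history` maps a run label to that run's average score; labels are
    assumed to sort chronologically (e.g. "2024-05", "2024-06").
    """
    runs = sorted(history)
    return [(prev, cur, history[cur] - history[prev]) for prev, cur in zip(runs, runs[1:])]


def flag_changes(history: Dict[str, float], tolerance: float) -> List[Delta]:
    """Keep only the deltas whose magnitude exceeds a hand-picked tolerance."""
    return [d for d in metric_deltas(history) if abs(d[2]) > tolerance]
```

A fixed tolerance is the simplest possible choice; it ignores how noisy each metric naturally is, which is why looking at distributions rather than single numbers becomes important.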

For summarization, the task is to repeatedly summarize the same set of texts at a regular cadence and compare each generated summary to a fixed reference summary. To achieve this, we rely on what’s commonly known as a Golden Dataset. For this blog post, I’ve created a simple Golden Dataset consisting of 60 different pieces of text across six categories:

- Economics & Finance

- Health & Medicine

- History

- News

- Scientific Papers

- Technology

Each text is paired with a fixed reference summary. Since the goal is just to extract a meaningful signal, the reference summary doesn’t need to be perfect; it only needs to be good enough to serve as a stable point of comparison for the generated summary.

An Economics & Finance example from the Golden Dataset can be seen below:

[{"text": "Inflation has been rising in many countries, driven by supply chain disruptions, increased demand, and higher energy prices. Central banks are grappling with how to respond, balancing the need to control inflation without stifling economic recovery. Experts warn that high inflation could lead to reduced purchasing power and economic instability.",
"reference_summary": "Rising inflation, driven by supply chain issues and higher energy prices, challenges central banks in balancing control with economic recovery."}]

And similarly from the Technology category:

[{"text": "Edge computing is transforming how data is processed by moving computation closer to the data source. This reduces latency and enhances performance for applications like autonomous vehicles and real-time data analysis. However, managing and securing decentralized data remains a significant challenge.",
"reference_summary": "Edge computing reduces latency by processing data closer to the source, enhancing performance, though managing and securing decentralized data is challenging."}]

Obviously, if we were testing a full AI-powered application, the Golden Dataset should reflect the tasks we want the application to complete. For testing a single LLM-endpoint, however, we only need a meaningful Golden Dataset that ideally reflects the business that relies on the LLM-endpoint.

What about the metrics?

When evaluating behavior, relying on a single metric is never sufficient. However, since the focus of this blog is on building intuition, we’ll keep it simple and examine just two key metrics.

For this example, we’ll look at the BLEU and ROUGE scores, two well-established NLP metrics that measure how much the wording of a generated text overlaps with that of a reference text. One could extend this set with many other metrics, such as toxicity levels and readability scores.
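To make the two metrics concrete, here is a dependency-free sketch of ROUGE-1 F1 and a smoothed sentence-level BLEU (up to 4-grams). It is an approximation for building intuition: the post does not say which ROUGE variant or BLEU smoothing it used, the tokenizer is a simple lowercase word split, and established packages such as `rouge-score` or `sacrebleu` are the better choice for real measurements.

```python
import math
import re
from collections import Counter
from typing import List


def tokenize(text: str) -> List[str]:
    """Lowercase word tokens; punctuation is dropped."""
    return re.findall(r"\w+", text.lower())


def ngrams(tokens: List[str], n: int) -> Counter:
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def rouge1_f1(reference: str, candidate: str) -> float:
    """ROUGE-1: F1 of clipped unigram overlap between reference and candidate."""
    ref, cand = ngrams(tokenize(reference), 1), ngrams(tokenize(candidate), 1)
    overlap = sum((ref & cand).values())  # Counter '&' keeps the minimum count
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)


def bleu(reference: str, candidate: str, max_n: int = 4) -> float:
    """Sentence BLEU: geometric mean of clipped n-gram precisions times a brevity penalty.

    Precisions for n > 1 use add-one smoothing so that a short summary with no
    matching 4-gram does not collapse to zero.
    """
    ref_toks, cand_toks = tokenize(reference), tokenize(candidate)
    if not cand_toks:
        return 0.0
    log_precision_sum = 0.0
    for n in range(1, max_n + 1):
        cand_ngrams = ngrams(cand_toks, n)
        overlap = sum((cand_ngrams & ngrams(ref_toks, n)).values())
        total = sum(cand_ngrams.values())
        if n == 1:
            if overlap == 0:
                return 0.0
            log_precision_sum += math.log(overlap / total)
        else:
            log_precision_sum += math.log((overlap + 1) / (total + 1))
    c, r = len(cand_toks), len(ref_toks)
    brevity_penalty = 1.0 if c > r else math.exp(1 - r / c)
    return brevity_penalty * math.exp(log_precision_sum / max_n)
```

BLEU is precision-oriented (with a penalty for short outputs), while ROUGE-1 F1 balances precision and recall, which is one reason the two can move in different directions on the same summaries.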

And what about the LLM-endpoint?

From countless conversations I’ve had, there are three primary ways teams access LLMs:

- Third-Party Endpoints – Providers like OpenAI and Together AI that offer external API access.

- Self-Hosted – Teams running their own LLMs on dedicated infrastructure.

- Company-Hosted – Organization-wide initiatives where LLMs are deployed on internal infrastructure for company-wide use.

Each approach comes with its own trade-offs. For this blog, we’ll focus on the company-hosted model and act as an engineering team using a Llama LLM-endpoint provided through a company-wide GenAI platform. This means that we have very little control over the LLM-endpoint and use whatever a different team provides.

Additionally, we’ll be looking at an 11-month time period from May 2024 to March 2025.

Do vibe checks work?

We’ve found that most companies start with basic vibe checks. So, let’s begin by examining a single piece of text alongside its reference summary.

[{"text": "Edge computing is transforming how data is processed by moving computation closer to the data source. This reduces latency and enhances performance for applications like autonomous vehicles and real-time data analysis. However, managing and securing decentralized data remains a significant challenge.",
"reference_summary": "Edge computing reduces latency by processing data closer to the source, enhancing performance, though managing and securing decentralized data is challenging."}]

Next, we can query our LLM-endpoint to retrieve and log the summary generated for the above example each month.

May 2024 – Summary of above example

"Edge computing reduces latency and enhances performance by processing data closer to the source, ideal for applications like autonomous vehicles and real-time analysis."

June 2024 – Summary of above example

"Edge computing reduces latency and enhances performance by moving computation closer to data sources, ideal for applications like autonomous vehicles and real-time analysis. However, managing and securing decentralized data remains a significant challenge."

July 2024 – Summary of above example

"Edge computing reduces latency and enhances performance by moving computation closer to data sources, ideal for applications like autonomous vehicles and real-time analysis. However, managing and securing decentralized data remains a significant challenge."

August 2024 – Summary of above example

"Edge computing transforms data processing by moving computation closer to the data source, reducing latency and enhancing performance for applications like autonomous vehicles and real-time data analysis."

September 2024 – Summary of above example

"Edge computing is transforming data processing by moving computation closer to the data source, reducing latency and enhancing performance for applications like autonomous vehicles and real-time data analysis."

October 2024 – Summary of above example

"Edge computing moves computation closer to data sources, reducing latency and enhancing performance for applications like autonomous vehicles and real-time data analysis, while managing and securing decentralized data remains a significant challenge."

November 2024 – Summary of above example

"Edge computing moves computation closer to data sources, reducing latency and enhancing performance for applications like autonomous vehicles and real-time data analysis, but managing and securing decentralized data remains a significant challenge."

December 2024 – Summary of above example

"Edge computing moves computation closer to data sources, reducing latency and enhancing performance for applications like autonomous vehicles and real-time data analysis."

January 2025 – Summary of above example

"Edge computing reduces latency and enhances performance by processing data closer to its source, but managing and securing decentralized data remains a challenge."

February 2025 – Summary of above example

"Edge computing reduces latency and enhances performance by processing data closer to its source, but managing and securing decentralized data remains a challenge."

March 2025 – Summary of above example

"Edge computing reduces latency and enhances performance by processing data closer to its source, but managing and securing decentralized data remains a challenge."

We can observe that the generated summaries vary from month to month, which is consistent with the non-deterministic nature of LLMs. However, as humans, we can’t easily tell whether these differences are purely due to non-determinism or whether other factors are at play.

This is where a statistical lens can help!

A statistical perspective?

With our Golden Dataset in place, we no longer need to rely on simple month-over-month vibe checks. We can instead examine the distributions of key metrics and track their evolution over time.

This kind of analysis is at the core of what the Distributional platform enables at scale. However, to build intuition, we’ll continue with the simple two-metric example outlined earlier.
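As a rough illustration of looking at a distribution rather than a single average, one month's per-text scores for a metric can be summarized by their spread and quartiles. This is a standard-library sketch written for this post's example, not how the Distributional platform computes anything:

```python
import statistics
from typing import Dict, List


def distribution_summary(scores: List[float]) -> Dict[str, float]:
    """Describe one run's per-text scores for a single metric (needs >= 2 scores)."""
    q1, median, q3 = statistics.quantiles(scores, n=4)  # quartile cut points
    return {
        "mean": statistics.fmean(scores),
        "stdev": statistics.stdev(scores),
        "p25": q1,
        "median": median,
        "p75": q3,
        "min": min(scores),
        "max": max(scores),
    }
```

Comparing these summaries month over month can reveal, for example, a widening spread even when the mean barely moves.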

The workflow is as follows. Each month, we:

- Generate a summary for each text in the Golden Dataset.

- Compute ROUGE and BLEU scores for each reference-summary and generated-summary pair.

- Calculate the average ROUGE and BLEU scores for each subclass in the Golden Dataset.

- Compute the overall average ROUGE and BLEU scores across all data.
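The scoring and averaging steps above can be sketched as follows, assuming each month's log records carry `category`, `reference_summary`, and `generated_summary` fields (an assumption of this sketch) and that each metric is any function taking a reference and a candidate summary and returning a score, such as a ROUGE or BLEU implementation:

```python
from collections import defaultdict
from statistics import fmean
from typing import Callable, Dict, List

Scorer = Callable[[str, str], float]  # (reference, candidate) -> score


def monthly_report(records: List[dict], scorers: Dict[str, Scorer]) -> dict:
    """Score every record, then average each metric per category and overall."""
    per_category: Dict[str, Dict[str, List[float]]] = defaultdict(lambda: defaultdict(list))
    overall: Dict[str, List[float]] = defaultdict(list)
    for record in records:
        for name, score in scorers.items():
            value = score(record["reference_summary"], record["generated_summary"])
            per_category[record["category"]][name].append(value)
            overall[name].append(value)
    return {
        "per_category": {
            category: {name: fmean(values) for name, values in metrics.items()}
            for category, metrics in per_category.items()
        },
        # Averaged over all texts; with equally sized categories this equals
        # the mean of the category averages.
        "overall": {name: fmean(values) for name, values in overall.items()},
    }
```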

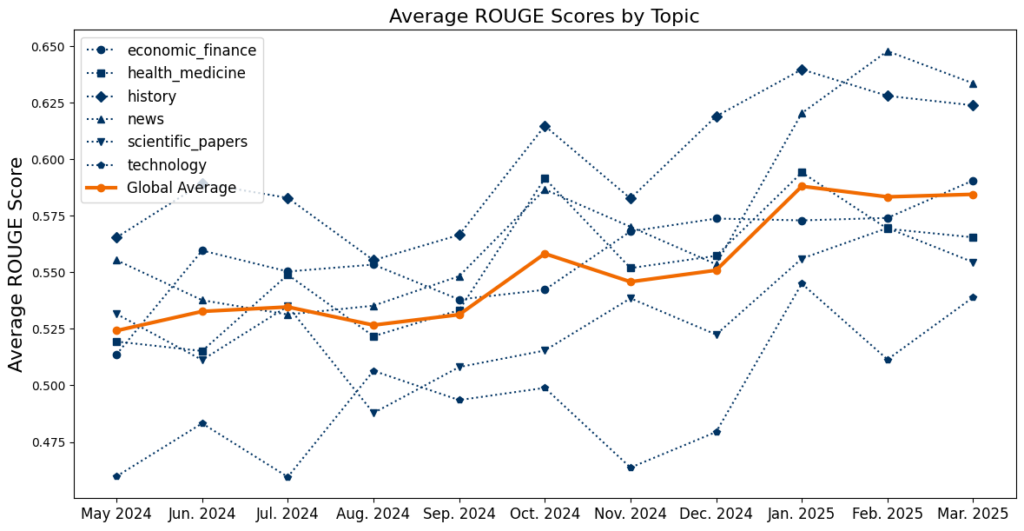

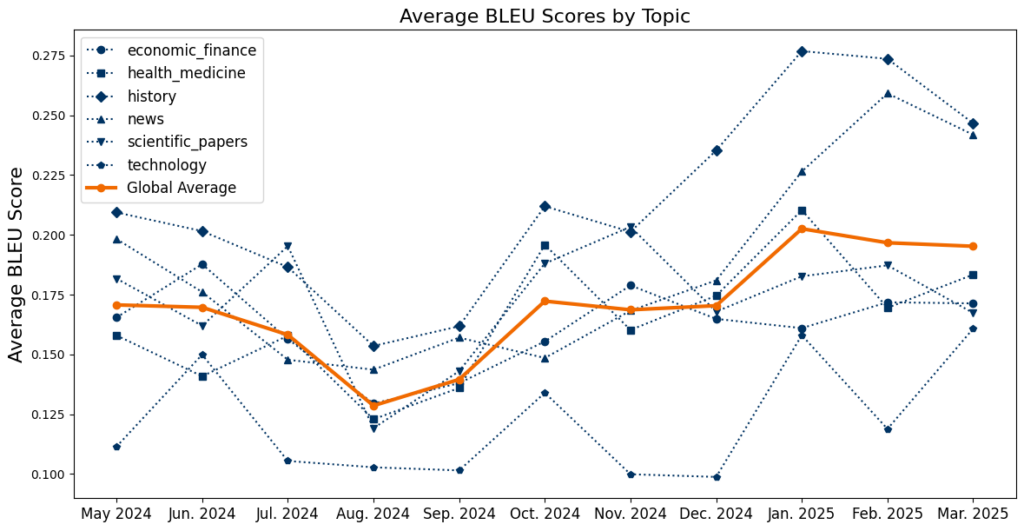

From here, we can visualize how both ROUGE and BLEU scores change month over month for both the entire Golden Dataset and each of the subclasses.

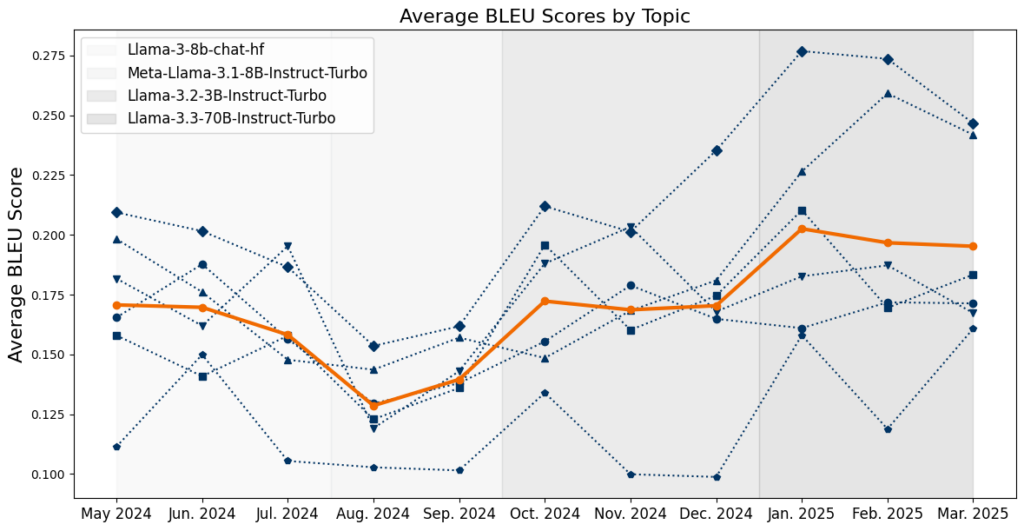

When analyzing the ROUGE score over time, we observe that our AI application is gradually improving. However, its performance isn’t consistent across all subclasses: some are summarized more effectively than others relative to our reference summaries. Interestingly, as the BLEU results show, an improvement in one metric doesn’t necessarily mean all metrics will improve in parallel.

The BLEU score follows a similar trend to the ROUGE score, but at certain points we observe a regression that isn’t reflected in the ROUGE metric: in August 2024 and September 2024, the BLEU score regresses while the ROUGE score does not. This is exactly why we want people to think about change in terms of the distributional fingerprint rather than a single metric.
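A simple way to surface this kind of disagreement is to flag any run where at least one metric drops by more than a tolerance while at least one other metric does not. The helper below is hypothetical, and its hand-picked tolerance makes it only a crude stand-in for comparing full distributions:

```python
from typing import Dict, List


def divergent_regressions(
    series: Dict[str, Dict[str, float]], tolerance: float = 0.0
) -> Dict[str, List[str]]:
    """Map run label -> metrics that regressed while some other metric did not.

    `series` maps metric name -> {run label -> average score}; all metrics are
    assumed to share the same, chronologically sortable run labels.
    """
    metrics = list(series)
    runs = sorted(series[metrics[0]])
    flagged: Dict[str, List[str]] = {}
    for prev, cur in zip(runs, runs[1:]):
        regressed = sorted(
            m for m in metrics if series[m][cur] - series[m][prev] < -tolerance
        )
        # Flag only partial regressions: some metrics dropped, others held up.
        if regressed and len(regressed) < len(metrics):
            flagged[cur] = regressed
    return flagged
```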

What is happening?

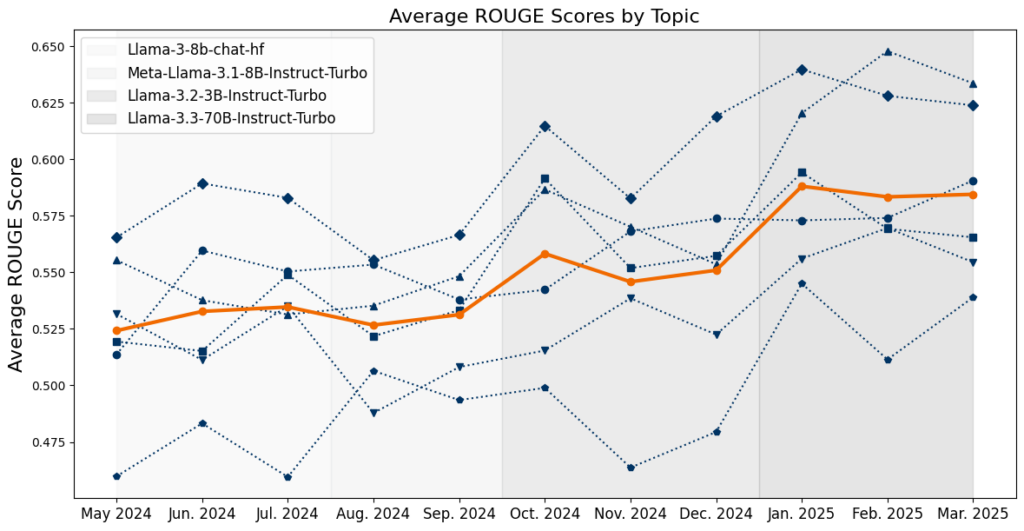

Why are we seeing performance increase over time? From the plot above, we can see that the team serving the LLM-endpoint has been following the Llama release schedule, regularly updating the underlying LLM-endpoint to the most recent Llama model.

Whether teams actually do this type of model update in the wild is not the point; there are plenty of arguments for and against it. What I want to show is that even for a simple task like summarization, the right dataset can give us a strong indication of whether something is changing.

If you are constantly updating models to the newest version, a system like the one above for extracting a continuous signal makes a lot of sense. And for anyone who relies on a model provider for their LLM-endpoints, it is also a simple way to understand whether the provider is changing its models, and what impact those changes have.

Is there a signal?

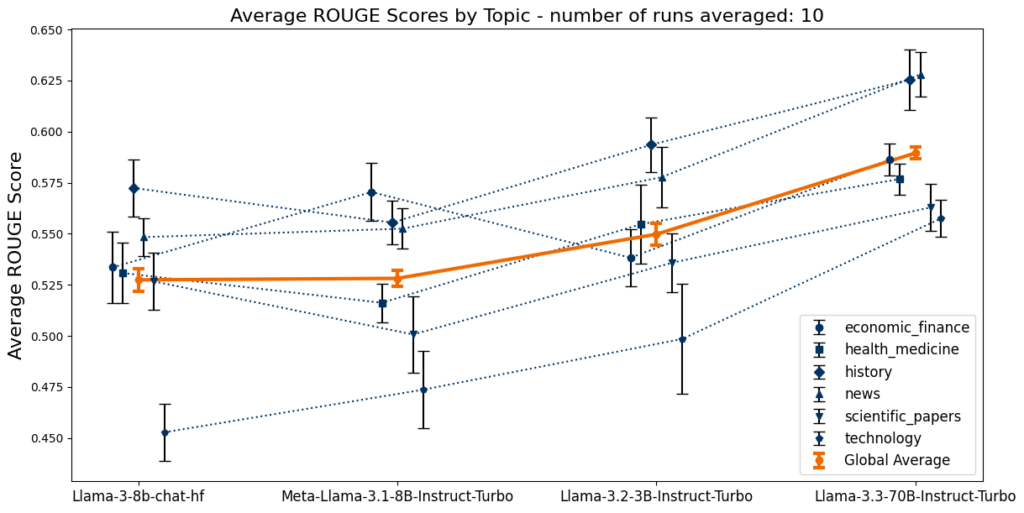

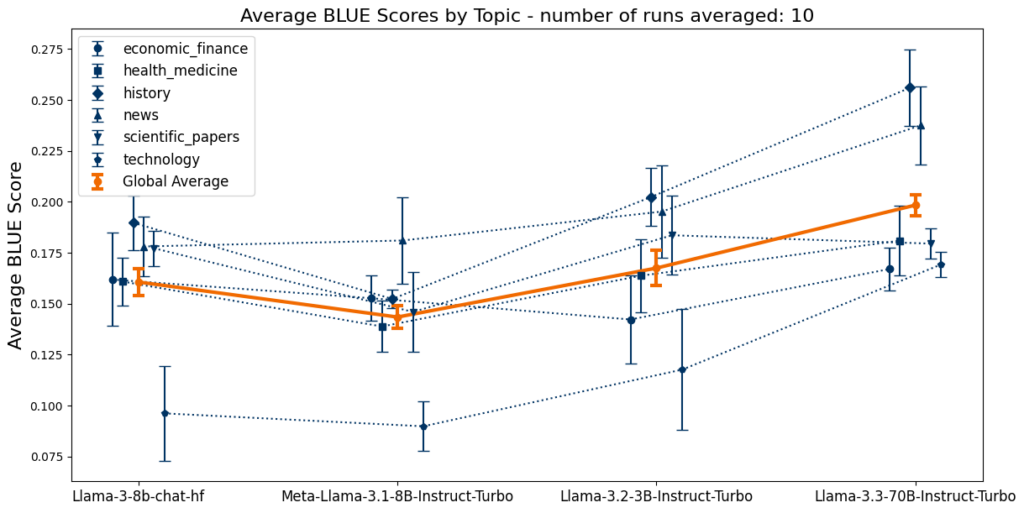

One last thing. It’s fair to ask whether everything we’ve seen so far simply reflects the inherent non-determinism of LLMs, or whether there’s a meaningful difference between these models. To dig into that, I ran the Golden Dataset through each of the four models 10 times to see whether the results truly differ or whether we’re just seeing randomness in action.

From the two plots, it is clear that there is a difference between the four models. Whether that difference will affect an AI-powered application is a topic we’ll cover in a later blog post.
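The comparison here is made visually. If you wanted a number to go with the plots, one option (a technique chosen for this sketch, not necessarily what was used for this post) is a permutation test on per-run average scores: with 10 runs per model, each model contributes 10 averages, and the test asks how often randomly relabeling those runs produces a gap in means at least as large as the observed one.

```python
import random
from statistics import fmean
from typing import Sequence


def permutation_p_value(
    a: Sequence[float], b: Sequence[float], n_permutations: int = 10_000, seed: int = 0
) -> float:
    """Two-sided permutation test for a difference in mean score between two models.

    `a` and `b` hold one score per repeated run (e.g. each run's overall
    average ROUGE). A small p-value suggests the gap is larger than
    run-to-run randomness alone would typically produce.
    """
    rng = random.Random(seed)
    observed = abs(fmean(a) - fmean(b))
    pooled = list(a) + list(b)
    hits = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)
        if abs(fmean(pooled[: len(a)]) - fmean(pooled[len(a):])) >= observed:
            hits += 1
    # The +1 terms count the observed labeling itself and keep p above zero.
    return (hits + 1) / (n_permutations + 1)
```

Comparing four models means six pairwise tests, so a multiple-comparison correction such as Bonferroni is worth applying before reading too much into any single p-value.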

Automating deployment testing

Distributional is helping companies define, understand, and improve the reliability of their AI applications. The above scenario represents just a subset of the challenges we’re helping to solve.

Our goal is to help teams develop confidence in the AI applications they’re taking to production, so they can rely on more than just a vibe check to ensure their applications are working as intended.

Interested in exploring how Distributional can help your organization? Sign up for access to our product or get in touch with our team to learn more.